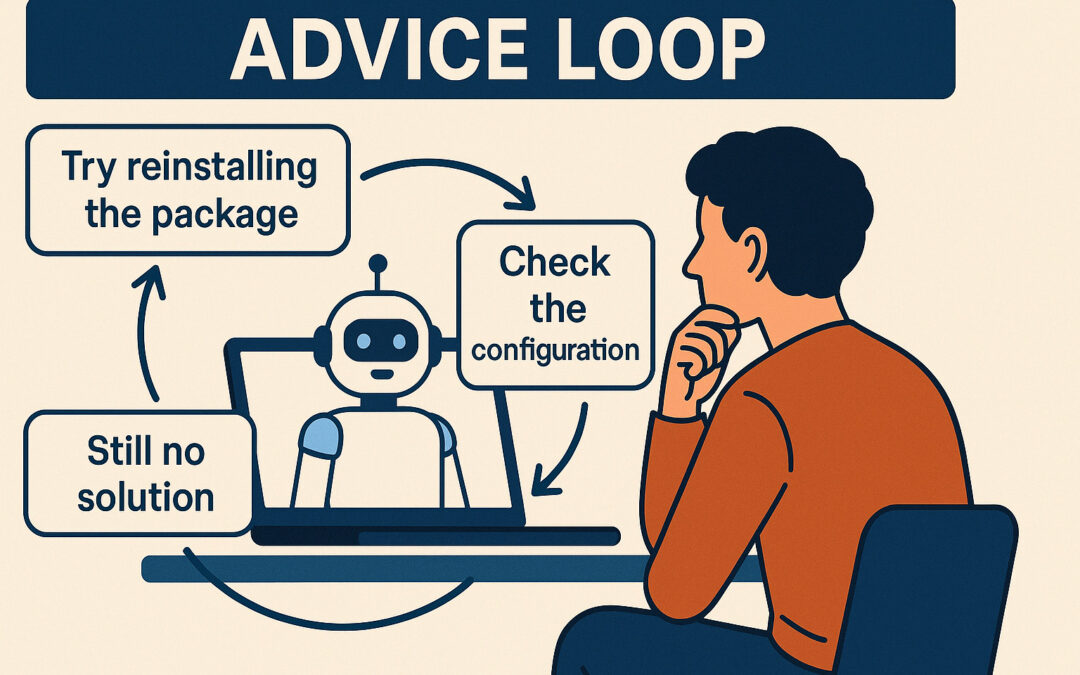

Have you ever found yourself in a strange loop with ChatGPT, Gemini, or any other generative AI tool? You enter a problem, get a suggestion, try it out, and… it doesn’t work. You tell the AI what happened, and it offers a slightly different suggestion. You try that, too. Still no solution. The cycle repeats. Welcome to the Advice Loop.

What Is the “GenAI Advice Loop”?

An Advice Loop occurs when a generative AI system repeatedly offers new pieces of advice, solutions, or troubleshooting steps, but none of them actually solve your problem. The AI isn’t getting “stuck” in the classic programming sense—there’s no error or system crash. Instead, you simply cycle through variations of the same kind of advice, over and over, without making real progress.

What Does an Advice Loop Look Like?

Let’s say you have a technical issue with a Python script.

You paste your code and the error message into ChatGPT. It suggests you “check the indentation.” You do. No fix.

You paste the updated error into the chat. Now it says, “try reinstalling the package.” You do. Still broken.

You explain what you’ve tried. It suggests “clearing the cache,” then “renaming the file,” then “checking your Python version.”

Each piece of advice is plausible, but nothing gets you closer to a real solution.

This is an Advice Loop: the AI is generating suggestions, but you’re going nowhere.
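The pattern in this example can be made concrete with a small check. The sketch below is only an illustration, not an established method: the `Attempt` record, the `in_advice_loop` function, the window of three attempts, and the sample error text are all hypothetical. It assumes you note down each suggestion together with the error you saw after trying it, and it flags a loop when the most recent attempts all ended in the same error.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    advice: str  # what the AI suggested
    error: str   # the error message seen after trying that suggestion


def in_advice_loop(attempts: list[Attempt], window: int = 3) -> bool:
    """Return True if the last `window` attempts all ended in the same error."""
    if len(attempts) < window:
        return False
    recent_errors = {a.error.strip() for a in attempts[-window:]}
    return len(recent_errors) == 1


# Hypothetical log of the session described above; the error text is made up.
SAME_ERROR = "ImportError: cannot import name 'helper'"
history = [
    Attempt("check the indentation", SAME_ERROR),
    Attempt("try reinstalling the package", SAME_ERROR),
    Attempt("clear the cache", SAME_ERROR),
]

if in_advice_loop(history):
    print("Same error after several different suggestions: likely an Advice Loop.")
```

Comparing error text is a crude signal. A changed error can still mean no progress, so treat the result as a nudge to step back rather than a verdict.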

Why Does This Happen?

Generative AIs like ChatGPT don’t truly “understand” problems the way humans do. They can only work with the data and context you provide. When the AI can’t identify a unique or specific root cause, it falls back on surface-level troubleshooting steps or generic advice.

Instead of saying, “I don’t know,” it keeps offering new suggestions—sometimes slightly reworded, sometimes only marginally different. The result: endless, unproductive loops.

Advice Loop vs. Feedback Loop

An Advice Loop is not the same as a feedback loop or model collapse (where a model is trained on its own generated output and quality degrades from one generation to the next).

Here, the loop is about the user experience:

- The user keeps getting plausible but unhelpful advice.

- The AI is not introducing errors, nor is it repeating itself exactly; it’s just failing to reach a solution.

Why Should You Care?

Advice Loops are a major source of frustration for users who expect generative AI to “think outside the box” or deliver real solutions. They highlight the current limitations of AI troubleshooting and advice—especially in ambiguous, context-heavy, or non-standard situations.

How to Break Out of an Advice Loop

- Reframe your prompt: Try providing more detailed or alternative context.

- Ask a different kind of question: Instead of “What should I do next?”, try “What is the root cause of this?” or “What information are you missing to help me further?”

- Take a break: Sometimes, switching tools or simply stepping away can help you spot the missing piece yourself.

- Consult a human: When AI suggestions keep spinning in circles, reaching out to a human expert can often resolve the deadlock.
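The first two tips can be combined into one reusable prompt. The following is a minimal sketch under stated assumptions: `build_reframed_prompt` and its parameters are hypothetical names, the sample problem and error are made up, and the wording of the prompt is just one possible phrasing. It lists everything that has already been tried and then asks the two diagnostic questions from above instead of requesting another fix.

```python
def build_reframed_prompt(problem: str, tried: list[str], error: str) -> str:
    """Build a prompt that summarizes failed attempts and asks for a diagnosis."""
    lines = [
        f"Problem: {problem}",
        f"Current error: {error}",
        "Already tried, with no change in the result:",
    ]
    lines += [f"- {step}" for step in tried]
    lines += [
        "Please do not suggest another fix yet.",
        "What is the root cause of this?",
        "What information are you missing to help me further?",
    ]
    return "\n".join(lines)


# Hypothetical usage; the problem description and error text are made up.
prompt = build_reframed_prompt(
    problem="My Python script fails on startup.",
    tried=[
        "checking the indentation",
        "reinstalling the package",
        "clearing the cache",
    ],
    error="ImportError: cannot import name 'helper'",
)
print(prompt)
```

Pasting the result into a fresh chat, or into a different tool, gives the model a compact summary rather than a long thread of back-and-forth.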

Should We Give the “Advice Loop” a Name?

This phenomenon seems widespread, but as far as I know it hasn’t been named or described in depth.

Let’s call it what it is: The Advice Loop.

It’s the cycle of plausible, well-intentioned, but ultimately unproductive advice that generative AI offers when it doesn’t actually know the answer.

The Emotional Trap of the Advice Loop

One of the trickiest aspects of the Advice Loop is how emotionally convincing it feels. Each time the AI suggests something new, you get a little spark of hope. Maybe this time, it’ll work. Maybe now you’re finally getting closer to a solution.

You follow the advice, you tweak your code, you change the settings, you wait for a breakthrough… But nothing changes. It’s like taking two steps forward and two steps back. The AI feels like it’s helping, but you’re just running in place.

My Real-Life Example

I recently spent hours stuck in such an Advice Loop with OpenAI’s GPT-4.1. I had a complex technical problem and poured tens of thousands of words into a chat thread, carefully updating the AI with every step I took. It always gave plausible next steps, but never hit the mark.

Finally, I copied the entire conversation – the massive, detailed context – and pasted it into Google Gemini 2.5 Pro. To my surprise, Gemini quickly spotted the core issue and pointed me in the right direction.

Why did it work? Maybe Gemini’s model could handle longer or more complex context. Maybe its problem-solving approach is different. The lesson for me: sometimes the best way out of an Advice Loop is to change tools – or at least get a second opinion.

Final Thoughts

As AI becomes more central to our workflows, understanding its strengths and its limitations is critical. The Advice Loop is a useful concept for users, developers, and researchers alike:

- For users, it helps identify when you’re “going in circles.”

- For developers, it’s a prompt to improve how AI tools acknowledge their limits.

- For researchers, it’s an open question: how can we help AI break out of advice loops and actually deliver value?

💬 Have you experienced the Advice Loop with generative AI? Share your stories or thoughts!